Each inference thread invokes a JIT interpreter that executes the ops of a model inline, one by one.

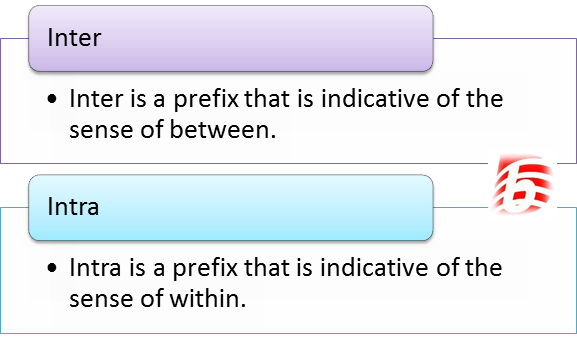

The docs say “PyTorch uses a single thread pool for the inter-op parallelism, this thread pool is shared by all inference tasks that are forked within the application process.” What I struggle to understand is how one “forks” a new thread that uses the inter-op threads from libtorch. (I can see that the PyTorch example uses a _fork() function from jit, but I can't find anything similar in libtorch.) Or does the forking happen automatically inside the libtorch code? Another thing the docs say is “One or more inference threads execute a model’s forward pass on the given inputs.” We can see a clear performance difference when setting different numbers of intra-op threads, but basically none with different numbers of inter-op threads.


Thanks for the reply, I work on a project together with Jim. To make things clear, we are creating a model procedurally inside C++. Right now we are working on simple feed-forward nets, where we feed linear modules of varying size and activation functions into a sequential module, and use the forward method of the sequential module to run inference. We are testing on different shapes of the network and different sizes of the data. I have read through the docs you linked, but I am not sure I understand how the inter-op threads are used. So we are wondering what the difference between these two is, how one can utilize the inter-op thread pool, or if you have some nice resources on this topic! Some of the articles we looked at: “CPU threading and TorchScript inference” (PyTorch 1.9.0 documentation) and “Intra- and inter-operator parallelism in PyTorch (work plan)”.

It seems that libtorch provides two main parallelization options that the user can specify when computing on CPU: the intra-op thread count and the inter-op thread count. After some testing, we are still unsure where the difference between inter-op and intra-op parallelism lies. When we monitor the time on our machine, we see a performance difference when specifying the intra-op thread count, while specifying the inter-op thread count seems to make no difference.
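To make the distinction concrete, here is a minimal sketch in plain standard C++. It deliberately does not use libtorch or its thread pools; the function names `intra_op_sum` and `inter_op_sum_of_two` are hypothetical and exist only for illustration. The first function splits a single operation across several threads (the intra-op idea), while the second runs two independent operations as separate forked tasks and then waits on both (the inter-op idea).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <future>
#include <numeric>
#include <thread>
#include <vector>

// Intra-op style: ONE operation (summing a vector) is split into chunks,
// and each chunk is handled by its own worker thread.
double intra_op_sum(const std::vector<double>& data, std::size_t num_threads) {
    assert(num_threads > 0);
    std::vector<double> partial(num_threads, 0.0);
    std::vector<std::thread> workers;
    // Ceiling division so that every element lands in exactly one chunk.
    const std::size_t chunk = (data.size() + num_threads - 1) / num_threads;
    for (std::size_t t = 0; t < num_threads; ++t) {
        const std::size_t begin = std::min(t * chunk, data.size());
        const std::size_t end = std::min(begin + chunk, data.size());
        workers.emplace_back([&data, &partial, t, begin, end] {
            const auto first = data.begin() + static_cast<std::ptrdiff_t>(begin);
            const auto last = data.begin() + static_cast<std::ptrdiff_t>(end);
            partial[t] = std::accumulate(first, last, 0.0);
        });
    }
    for (auto& w : workers) {
        w.join();
    }
    return std::accumulate(partial.begin(), partial.end(), 0.0);
}

// Inter-op style: TWO independent operations are forked as separate tasks
// and then waited on. Each task itself runs sequentially.
double inter_op_sum_of_two(const std::vector<double>& a,
                           const std::vector<double>& b) {
    auto task_a = std::async(std::launch::async, [&a] {
        return std::accumulate(a.begin(), a.end(), 0.0);
    });
    auto task_b = std::async(std::launch::async, [&b] {
        return std::accumulate(b.begin(), b.end(), 0.0);
    });
    return task_a.get() + task_b.get();  // "wait" on both forked tasks
}
```

Seen this way, one plausible reading of the timings above is that a plain sequential feed-forward net has no independent ops to fork: every layer needs the output of the previous one. If so, the inter-op thread count would have nothing to act on, while the intra-op count still speeds up each individual layer. This is an interpretation, not something the docs quoted here state outright.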


Hi fellow libtorchers! We are working on a project solely using the C++ API. Recently we started profiling our models, running on CPU, as we want to get a feel for the parallelization on CPU before moving to GPU.
